What A Search Engine Could See As Duplicate Content

Search engines can get confused when presented with a lot of highly similar content. If they find the same content at more than one location, or if there is marked similarity between the content on two different URLs, they may show only one of the results – and you have no guarantee it will be the one you would rather have displayed.
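To make "marked similarity" concrete, here is a minimal sketch that compares two page texts using overlapping three-word runs (shingles) and Jaccard similarity. This is only one common way to estimate text overlap and is not a description of how any particular search engine works; the function names and sample texts are made up for illustration.

```python
import re


def shingles(text, k=3):
    """Return the set of k-word shingles (overlapping word runs) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Text shorter than k words is treated as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(text_a, text_b, k=3):
    """Shared shingles divided by all distinct shingles across both texts."""
    a, b = shingles(text_a, k), shingles(text_b, k)
    if not a and not b:
        return 1.0  # two empty texts are trivially identical
    return len(a & b) / len(a | b)


# Hypothetical page texts, used only to exercise the functions.
original_page = "This durable steel kettle boils water fast and keeps it hot for hours."
copied_page = "This durable steel kettle boils water fast and keeps it hot for hours."
rewritten_page = "Our kettle heats a full litre in minutes and stays warm through the afternoon."

copied_score = jaccard_similarity(original_page, copied_page)
rewritten_score = jaccard_similarity(original_page, rewritten_page)
print(f"copied: {copied_score:.2f}, rewritten: {rewritten_score:.2f}")
```

A score near 1 means the two texts share almost all of their phrasing, while a score near 0 means they share very little. Running a check like this across your own pages can help you spot text worth rewriting.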

In some cases, if the search engine finds too much duplication as it crawls a site, it may give up and move on – leaving the rest of the site unindexed. It doesn’t make sense for a search engine to waste resources crawling content that is already in its system, and the last thing Google wants is a SERP with the same result listed again and again.

You may have seen evidence of this in your own searches online – you are served a notice letting you know that there are other results, but that they appear to be identical to ones already shown. Several different things could cause a crawler to assume it is looking at duplicate content:

1. Product descriptions – This is a major problem on large ecommerce sites, because most sellers and distributors use the manufacturer’s description in their page text. This makes the pages look nearly identical, so rewrite product descriptions yourself whenever possible to stand out from the crowd. Titles, meta descriptions, headings, navigation, and globally shared text can cause the same confusion, especially if your content management system won’t let you vary the meta description on each page.

2. Printer-friendly versions – These can be seen as duplicate content if you don’t disallow or noindex them. This is easily avoided with a little care, so always ask yourself before you add content: “Do I want the search engine to see this?”

3. Server-side included HTML RSS feeds – These should be replaced with a client-side include, such as JavaScript, so that the feed content is not picked up on numerous pages and mistaken for duplicate content.

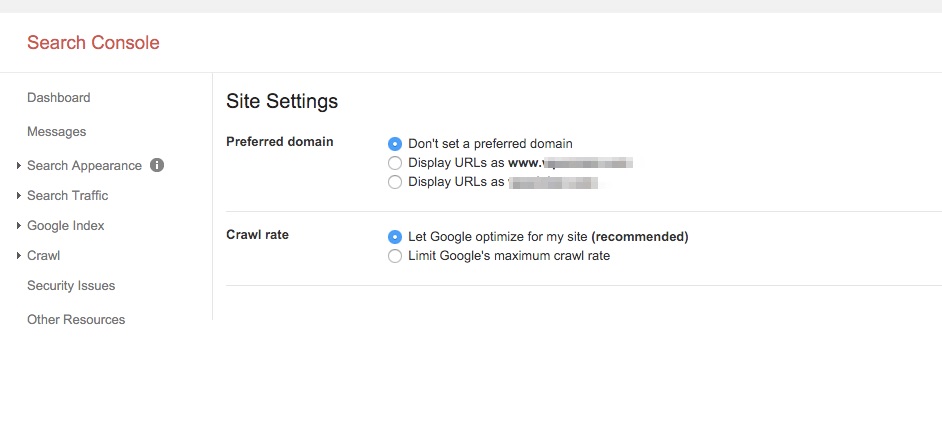

4. Different URLs pointing at the same page – Sometimes a crawler won’t realize it is crawling the same page because it can reach it through different URLs. The “canonical URL” should be the best one for the page to be indexed under, but in some cases the engines don’t see that a page has more than one URL and will keep indexing the same page under each of them. This can also occur if you serve a session ID to the crawler, which changes the URL a little each time. Follow the Google Webmaster Guidelines and don’t track the bots!

5. Copyright infringement – This is a huge problem that requires constant vigilance to root out offenders and reclaim your content, so subscribe to a service such as Copyscape and search periodically for strings of unique text to find scrapers. Be aware that if you offer free articles that others may republish in return for a link, the syndicated copy could end up outranking your original. The filtering process does not always recognize which version came first!

6. Domains and subdomains with similar content – The bots may not recognize that you are trying to promote different brands or open new markets if they see several top-level domains with a lot of the same content, so be careful! The same holds true for mirrored sites, though some of these are finally being ignored automatically.
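The point in item 4, that one page can be reachable under several URLs, can be illustrated with a small normalization sketch. It uses only Python's standard `urllib.parse` module. The parameter names in `SESSION_PARAMS`, the choice to drop a leading `www.`, and the `example.com` URLs are all assumptions for illustration; adjust them to how your own site actually behaves.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical query parameters that carry session state rather than content.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}


def normalize_url(url):
    """Collapse common URL variants of one page into a single form."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # .hostname is already lowercased
    if host.startswith("www."):
        host = host[4:]  # assumption: www and non-www serve the same site
    if parts.port:
        host = f"{host}:{parts.port}"
    path = parts.path or "/"
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]  # /shoes/index.html -> /shoes/
    if len(path) > 1 and path.endswith("/"):
        path = path.rstrip("/")  # /shoes/ -> /shoes
    # Drop session parameters and sort the rest so ordering does not matter.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in SESSION_PARAMS
    )
    return urlunsplit((parts.scheme.lower(), host, path, urlencode(query), ""))


variants = [
    "http://www.example.com/shoes/?sessionid=abc123",
    "http://example.com/shoes",
    "http://EXAMPLE.com/shoes/index.html?sid=9",
]
canonical_forms = {normalize_url(u) for u in variants}
print(canonical_forms)
```

If a list of URLs from your own site collapses to far fewer entries after normalization, a crawler may be seeing the same page several times over.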

Many duplicate content issues can be avoided by judicious use of the robots.txt file, so that similar pages are simply ignored by the bots. Others require a little more time and effort to work through. Of course, the best option is to consider such issues as you build your site, and avoid any behavior that could lead to your site being left unindexed due to perceived duplicate content.
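As a rough sketch of the robots.txt approach, the example below uses Python's standard `urllib.robotparser` to check which URLs a set of rules would block. The `/print/` directory and the `example.com` URLs are assumptions chosen for illustration (for instance, a folder holding printer-friendly copies), not a recommendation for any specific site layout.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps all crawlers out of a /print/ directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="*"):
    """Return True if the rules above allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/articles/duplicate-content",
    "https://example.com/print/duplicate-content",
):
    print(url, "->", "allowed" if is_crawlable(url) else "blocked")
```

Testing rules this way before publishing them helps confirm that you are blocking only the duplicate versions and not the pages you want indexed.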
